CEP-RLE Rust Implementation Specification

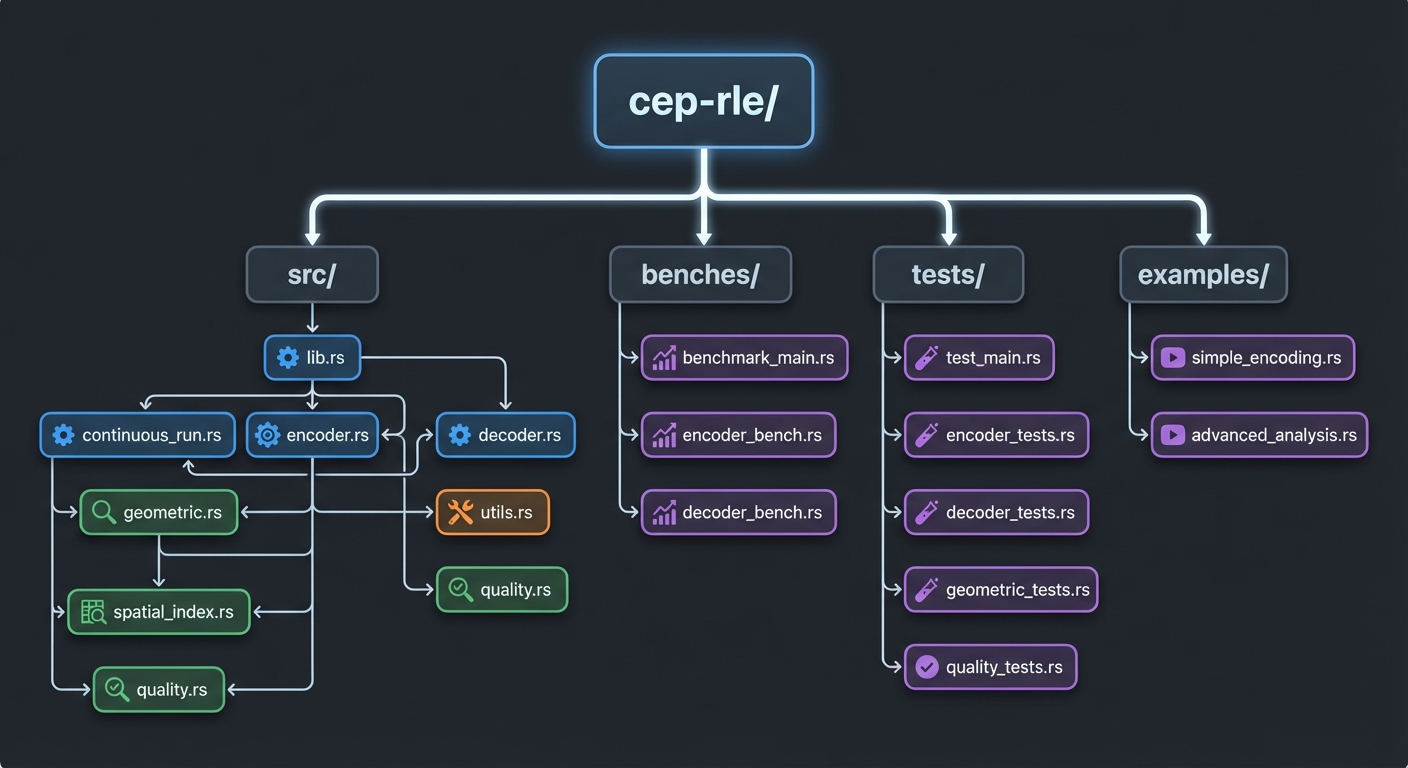

Project Structure

cep-rle/
├── Cargo.toml
├── src/
│   ├── lib.rs                    # Main library interface
│   ├── continuous_run.rs         # Core continuous run data structures
│   ├── expectation_prior.rs      # Expectation-prior mechanism
│   ├── encoder.rs                # CEP-RLE encoder implementation
│   ├── decoder.rs                # CEP-RLE decoder implementation
│   ├── analysis/
│   │   ├── mod.rs                # Analysis module interface
│   │   ├── geometric.rs          # Geometric feature extraction
│   │   ├── spatial_index.rs      # Spatial indexing and queries
│   │   └── quality.rs            # Quality assessment metrics
│   ├── utils/
│   │   ├── mod.rs                # Utility functions
│   │   ├── precision.rs          # Sub-pixel precision handling
│   │   └── statistics.rs         # Statistical computation utilities
│   └── test_data/
│       ├── mod.rs                # Test data generation
│       └── synthetic.rs          # Synthetic test pattern generation
├── benches/
│   ├── compression_bench.rs      # Compression performance benchmarks
│   ├── analysis_bench.rs         # Analysis performance benchmarks
│   └── comparison_bench.rs       # Comparison with baseline methods
├── tests/
│   ├── integration_tests.rs      # Integration test suite
│   ├── accuracy_tests.rs         # Precision and accuracy validation
│   └── compatibility_tests.rs    # Format compatibility tests
└── examples/
    ├── basic_usage.rs            # Basic CEP-RLE usage example
    ├── computer_vision.rs        # Computer vision pipeline example
    ├── cad_processing.rs         # CAD/graphics processing example
    └── video_analysis.rs         # Video processing example

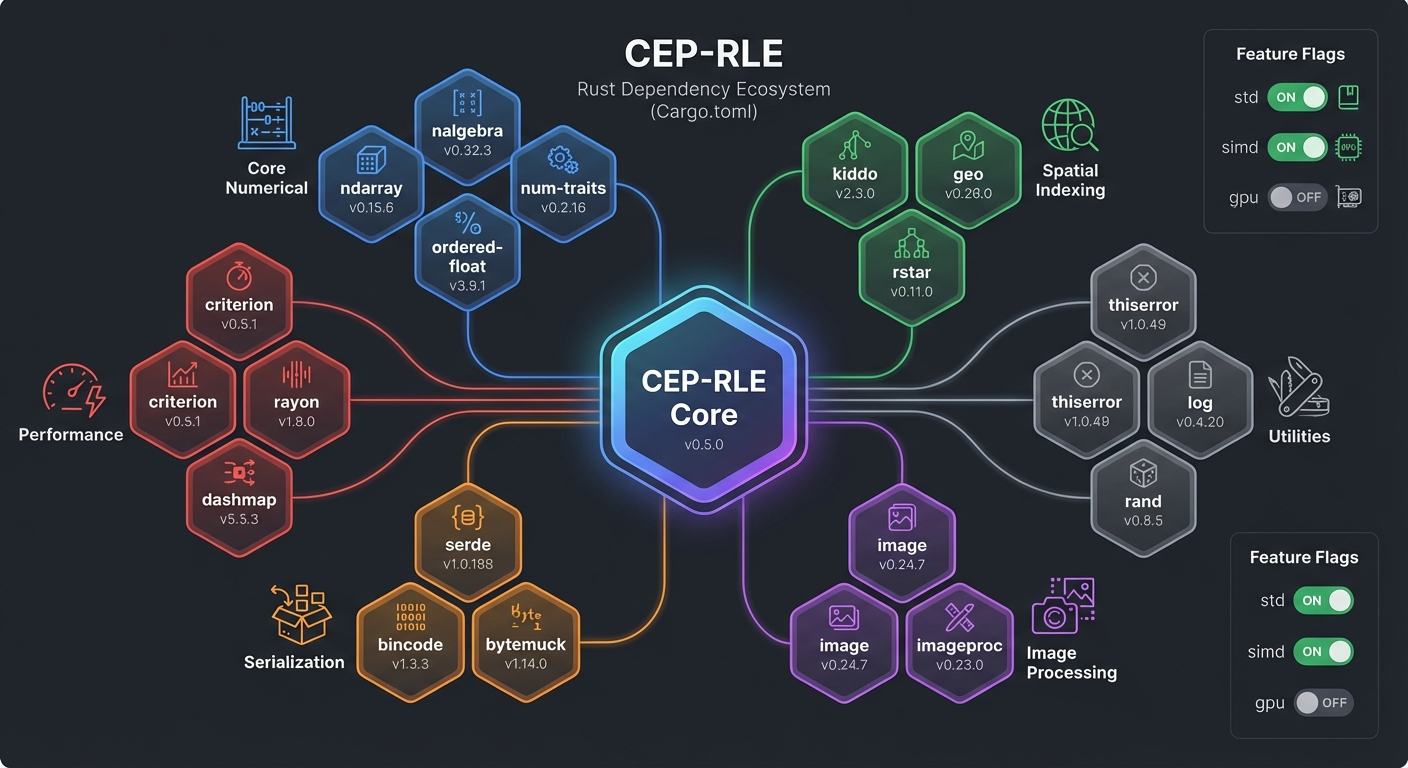

Core Dependencies (Cargo.toml)

[package]

name = "cep-rle"

version = "0.1.0"

edition = "2021"

authors = ["Your Name <your.email@example.com>"]

description = "Continuous Expectation-Prior Run-Length Encoding with Analysis-Ready Representations"

license = "MIT OR Apache-2.0"

repository = "https://github.com/yourusername/cep-rle"

[dependencies]

# Core numerical computing

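nalgebra = { version = "0.33", features = ["serde-serialize"] } # Linear algebra for geometric computations; serde support is needed by the Serialize/Deserialize derives on structs holding Vector2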

ndarray = "0.15" # N-dimensional arrays for image data

num-traits = "0.2" # Numeric trait abstractions

ordered-float = "4.2" # Deterministic floating-point ordering

# Spatial data structures and indexing

kiddo = "4.2" # k-d tree for spatial indexing

geo = "0.28" # Geometric primitives and operations

rstar = "0.12" # R*-tree spatial index

# Serialization and I/O

serde = { version = "1.0", features = ["derive"] }

bincode = "1.3" # Binary serialization

bytemuck = "1.14" # Safe transmutation between types

# Image processing (for testing and comparison)

image = "0.24" # Image I/O and basic processing

imageproc = "0.23" # Image processing algorithms

# Performance (criterion is a benchmark-only tool and is listed under [dev-dependencies])

rayon = { version = "1.8", optional = true } # Data parallelism; optional so that the `rayon` feature can enable it

dashmap = "5.5" # Concurrent hashmap

# Utilities

thiserror = "1.0" # Error handling

log = "0.4" # Logging

env_logger = "0.10" # Environment-based logger

rand = "0.8" # Random number generation

rand_distr = "0.4" # Probability distributions

[dev-dependencies]

criterion = { version = "0.5", features = ["html_reports"] }

proptest = "1.4" # Property-based testing

approx = "0.5" # Floating-point comparisons

tempfile = "3.8" # Temporary file creation

[features]

default = ["std", "rayon"]

std = []

simd = ["nalgebra/simd"] # SIMD optimizations

gpu = [] # Future GPU acceleration support

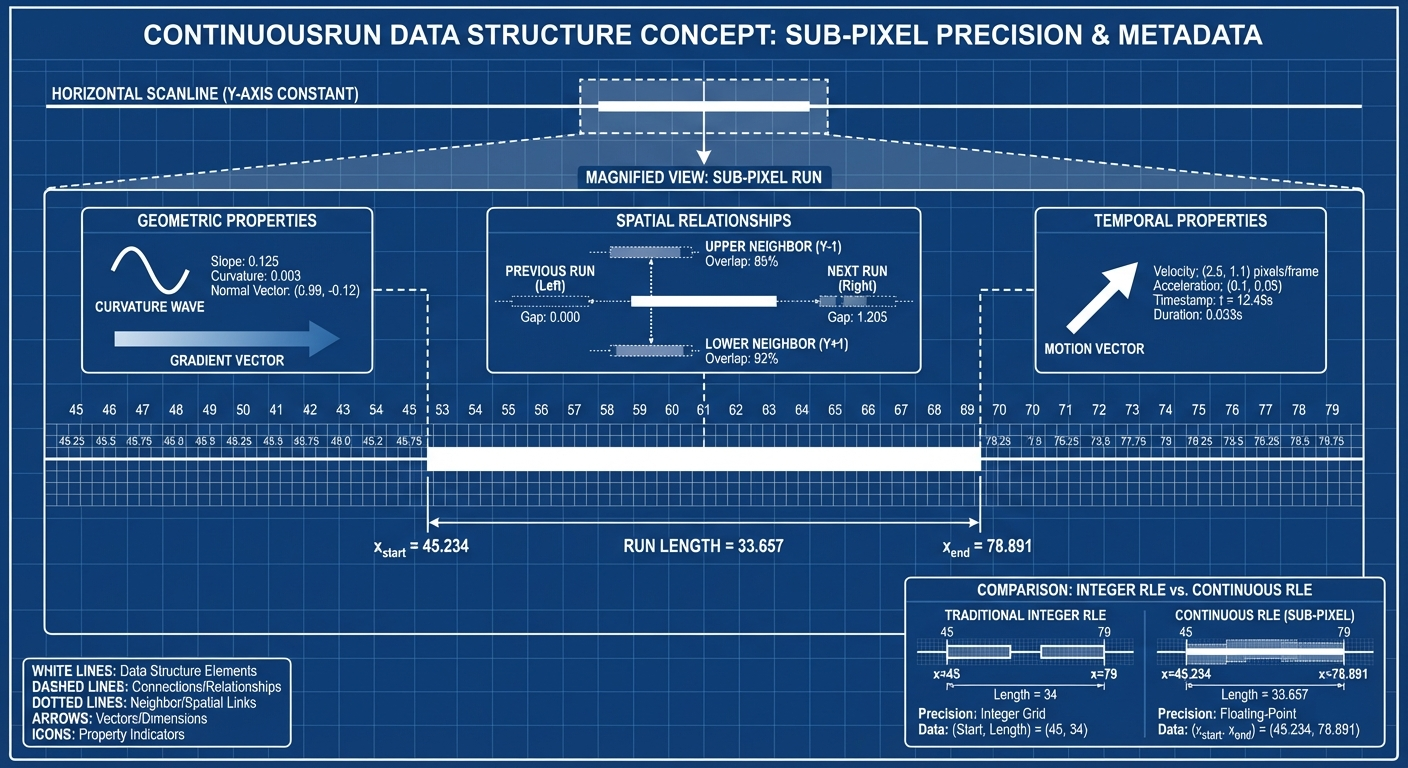

Core Data Structures

1. Continuous Run Representation

// src/continuous_run.rs

use nalgebra::Vector2;

use serde::{Deserialize, Serialize};

/// Continuous run with sub-pixel precision and analysis metadata

#[derive(Debug, Clone, Serialize, Deserialize)]

pub struct ContinuousRun {

/// Sub-pixel start position (real-valued)

pub x_start: f64,

/// Sub-pixel end position (real-valued)

pub x_end: f64,

/// Semantic content/color value

pub value: u32,

/// Prediction confidence [0.0, 1.0]

pub confidence: f64,

/// Scanline row index

pub row: u32,

// Geometric analysis properties

pub geometric_props: GeometricProperties,

// Spatial relationships

pub spatial_rels: SpatialRelationships,

// Temporal properties (for video)

pub temporal_props: Option<TemporalProperties>,

}

/// Geometric properties for analysis

#[derive(Debug, Clone, Serialize, Deserialize)]

pub struct GeometricProperties {

/// Local boundary curvature

pub curvature: f64,

/// C¹ continuity measure

pub smoothness: f64,

/// Boundary orientation vector

pub gradient: Vector2<f64>,

/// Arc length for curved boundaries

pub arc_length: f64,

/// Detected primitive type

pub primitive_type: PrimitiveType,

}

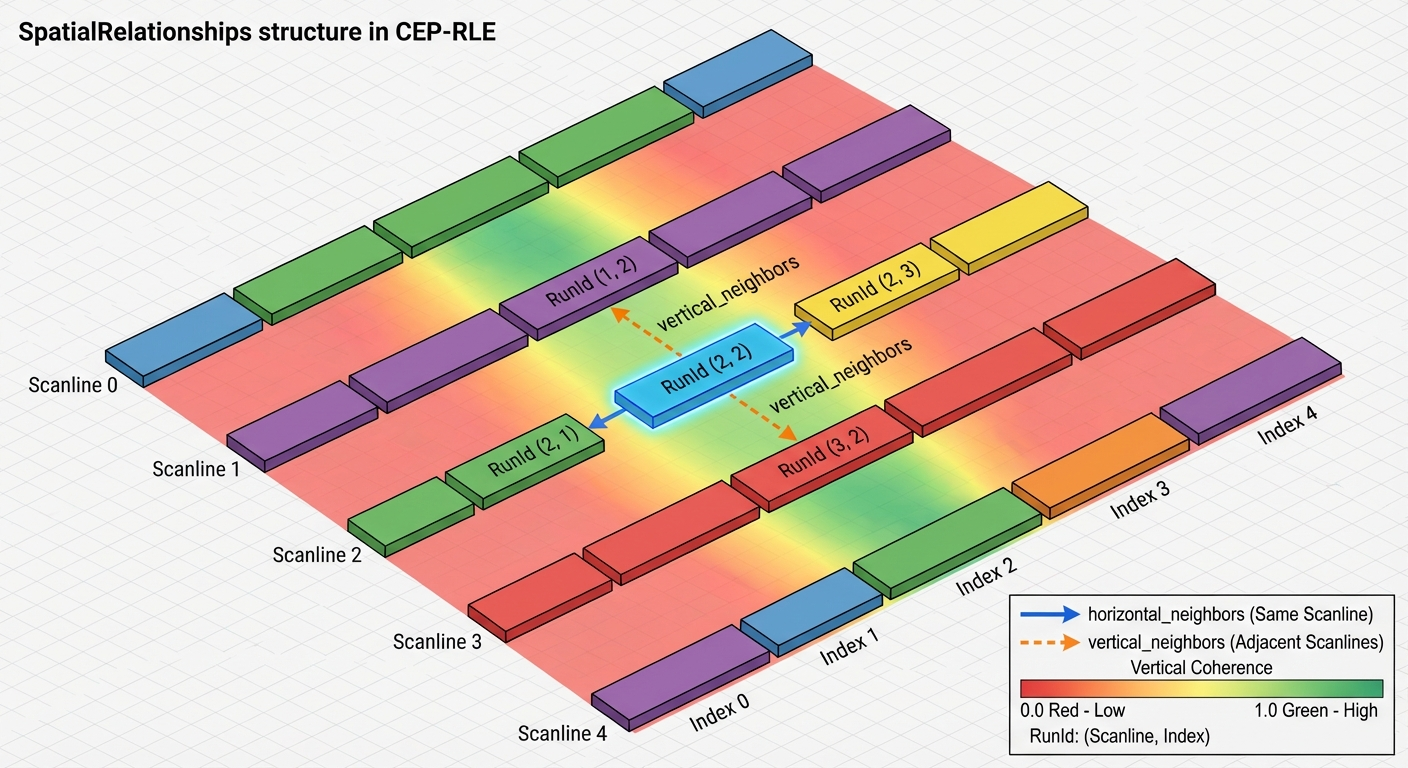

/// Spatial relationship metadata

#[derive(Debug, Clone, Serialize, Deserialize)]

pub struct SpatialRelationships {

/// Cross-scanline correlation [0.0, 1.0]

pub vertical_coherence: f64,

/// Adjacent run indices in same scanline

pub horizontal_neighbors: Vec<RunId>,

/// Cross-scanline neighbor indices

pub vertical_neighbors: Vec<RunId>,

}

/// Temporal properties for video sequences

#[derive(Debug, Clone, Serialize, Deserialize)]

pub struct TemporalProperties {

/// Cross-frame correlation [0.0, 1.0]

pub temporal_consistency: f64,

/// Predicted motion vector

pub motion_vector: Vector2<f64>,

/// Source frame for prediction

pub prediction_source: u32,

}

/// Unique run identifier

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash, Serialize, Deserialize)]

pub struct RunId {

pub scanline: u32,

pub index: u32,

}

/// Geometric primitive classification

#[derive(Debug, Clone, Copy, PartialEq, Eq, Serialize, Deserialize)]

pub enum PrimitiveType {

Line,

Curve,

Corner,

Edge,

Smooth,

Noise,

}

impl ContinuousRun {

/// Create a new continuous run with basic properties

pub fn new(x_start: f64, x_end: f64, value: u32, row: u32) -> Self {

Self {

x_start,

x_end,

value,

confidence: 1.0,

row,

geometric_props: GeometricProperties::default(),

spatial_rels: SpatialRelationships::default(),

temporal_props: None,

}

}

/// Get run length in continuous domain

pub fn length(&self) -> f64 {

self.x_end - self.x_start

}

/// Get run center position

pub fn center(&self) -> f64 {

(self.x_start + self.x_end) / 2.0

}

/// Check if run contains a given x position

pub fn contains(&self, x: f64) -> bool {

x >= self.x_start && x <= self.x_end

}

/// Calculate overlap with another run

pub fn overlap(&self, other: &ContinuousRun) -> f64 {

let start = self.x_start.max(other.x_start);

let end = self.x_end.min(other.x_end);

(end - start).max(0.0)

}

}

impl Default for GeometricProperties {

fn default() -> Self {

Self {

curvature: 0.0,

smoothness: 0.0,

gradient: Vector2::zeros(),

arc_length: 0.0,

primitive_type: PrimitiveType::Smooth,

}

}

}

impl Default for SpatialRelationships {

fn default() -> Self {

Self {

vertical_coherence: 0.0,

horizontal_neighbors: Vec::new(),

vertical_neighbors: Vec::new(),

}

}

}
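
The interval arithmetic behind `length`, `center`, `contains`, and `overlap` can be checked in isolation. The sketch below is a minimal, std-only stand-in: the `Span` type is hypothetical and omits the metadata fields of `ContinuousRun`. It assumes runs are closed intervals on the x-axis, as `contains` above implies.

```rust
/// Hypothetical stripped-down stand-in for `ContinuousRun`: only the interval.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Span {
    pub x_start: f64,
    pub x_end: f64,
}

impl Span {
    /// Length of the interval in the continuous domain.
    pub fn length(&self) -> f64 {
        self.x_end - self.x_start
    }

    /// Midpoint of the interval.
    pub fn center(&self) -> f64 {
        (self.x_start + self.x_end) / 2.0
    }

    /// Closed-interval membership test, mirroring `ContinuousRun::contains`.
    pub fn contains(&self, x: f64) -> bool {
        x >= self.x_start && x <= self.x_end
    }

    /// Length of the shared part of two intervals; 0.0 when they are disjoint.
    pub fn overlap(&self, other: &Span) -> f64 {
        let start = self.x_start.max(other.x_start);
        let end = self.x_end.min(other.x_end);
        (end - start).max(0.0)
    }
}
```

For example, the spans [2.0, 6.0] and [5.0, 9.0] overlap by 1.0, while [2.0, 6.0] and [7.0, 8.0] are disjoint and give 0.0.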

2. Expectation-Prior Mechanism

// src/expectation_prior.rs

use crate::continuous_run::{ContinuousRun, RunId};

use nalgebra::DVector;

use std::collections::{HashMap, VecDeque};

/// Expectation-prior mechanism for predictive encoding

#[derive(Debug, Clone)]

pub struct ExpectationPrior {

/// Sliding window of recent scanlines

window_size: usize,

/// Recent scanline data

scanline_history: VecDeque<Vec<ContinuousRun>>,

/// Spatial bins for position-specific statistics

spatial_bins: SpatialBinManager,

/// Statistical models for different regions

models: HashMap<BinId, StatisticalModel>,

}

/// Spatial bin manager for position-specific statistics

#[derive(Debug, Clone)]

pub struct SpatialBinManager {

/// Number of spatial bins

num_bins: usize,

/// Bin width in continuous domain

bin_width: f64,

/// Image width for normalization

image_width: f64,

}

/// Statistical model for a spatial bin

#[derive(Debug, Clone)]

pub struct StatisticalModel {

/// Mean run length in this bin

pub mean_length: f64,

/// Length variance

pub length_variance: f64,

/// Mean run value

pub mean_value: f64,

/// Value frequency distribution

pub value_histogram: HashMap<u32, f64>,

/// Geometric trend coefficients

pub trend_coefficients: DVector<f64>,

/// Confidence in predictions

pub model_confidence: f64,

/// Number of samples in model

pub sample_count: usize,

}

/// Spatial bin identifier

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]

pub struct BinId(usize);

/// Prediction result from expectation prior

#[derive(Debug, Clone)]

pub struct Prediction {

/// Predicted run boundaries

pub boundaries: Vec<f64>,

/// Predicted run values

pub values: Vec<u32>,

/// Confidence in prediction [0.0, 1.0]

pub confidence: f64,

/// Expected encoding precision

pub precision_requirement: f64,

}

impl ExpectationPrior {

/// Create new expectation-prior mechanism

pub fn new(window_size: usize, num_bins: usize, image_width: f64) -> Self {

Self {

window_size,

scanline_history: VecDeque::with_capacity(window_size),

spatial_bins: SpatialBinManager::new(num_bins, image_width),

models: HashMap::new(),

}

}

/// Update with new scanline data

pub fn update_scanline(&mut self, scanline: Vec<ContinuousRun>) {

// Update statistical models based on new scanline

self.update_models(&scanline);

// Add to history window

self.scanline_history.push_back(scanline);

// Maintain window size

if self.scanline_history.len() > self.window_size {

self.scanline_history.pop_front();

}

}

/// Predict runs for next scanline

pub fn predict_scanline(&self, target_row: u32) -> Prediction {

let mut boundaries = Vec::new();

let mut values = Vec::new();

let mut total_confidence = 0.0;

let mut precision_sum = 0.0;

// Predict based on spatial bin models

for bin_id in 0..self.spatial_bins.num_bins {

if let Some(model) = self.models.get(&BinId(bin_id)) {

let bin_prediction = self.predict_bin_runs(BinId(bin_id), model);

boundaries.extend(bin_prediction.boundaries);

values.extend(bin_prediction.values);

total_confidence += bin_prediction.confidence * model.sample_count as f64;

precision_sum += bin_prediction.precision_requirement;

}

}

let avg_confidence = if self.models.is_empty() {

0.0

} else {

total_confidence / self.models.values().map(|m| m.sample_count).sum::<usize>() as f64

};

let avg_precision = if self.models.is_empty() {

1e-4 // Default precision

} else {

precision_sum / self.models.len() as f64

};

Prediction {

boundaries,

values,

confidence: avg_confidence,

precision_requirement: avg_precision,

}

}

/// Update statistical models with new scanline

fn update_models(&mut self, scanline: &[ContinuousRun]) {

for run in scanline {

let bin_id = self.spatial_bins.get_bin(run.center());

// Update or create model for this bin

let model = self.models.entry(bin_id).or_insert_with(|| {

StatisticalModel::new()

});

model.update_with_run(run);

}

}

/// Predict runs for a specific spatial bin

fn predict_bin_runs(&self, bin_id: BinId, model: &StatisticalModel) -> Prediction {

// Geometric trend extrapolation

let trend_prediction = self.extrapolate_geometric_trend(bin_id, model);

// Statistical prediction based on historical patterns

let statistical_prediction = self.predict_statistical_pattern(model);

// Combine predictions with confidence weighting

self.combine_predictions(trend_prediction, statistical_prediction, model.model_confidence)

}

/// Extrapolate geometric trends

fn extrapolate_geometric_trend(&self, bin_id: BinId, model: &StatisticalModel) -> Prediction {

// Implement geometric trend extrapolation

// This would analyze the trend coefficients to predict boundary evolution

Prediction {

boundaries: vec![],

values: vec![],

confidence: model.model_confidence * 0.8, // Trend confidence factor

precision_requirement: 1e-4,

}

}

/// Predict based on statistical patterns

fn predict_statistical_pattern(&self, model: &StatisticalModel) -> Prediction {

// Implement statistical pattern prediction

// This would use the histogram and variance data

Prediction {

boundaries: vec![],

values: vec![],

confidence: model.model_confidence * 0.6, // Statistical confidence factor

precision_requirement: 1e-4,

}

}

/// Combine multiple predictions

fn combine_predictions(&self, pred1: Prediction, pred2: Prediction, confidence: f64) -> Prediction {

// Implement prediction combination logic

Prediction {

boundaries: pred1.boundaries, // Simplified - would do proper combination

values: pred1.values,

confidence: (pred1.confidence + pred2.confidence) / 2.0,

precision_requirement: pred1.precision_requirement.min(pred2.precision_requirement),

}

}

}
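
The scanline-level confidence computed in `predict_scanline` is a mean of per-bin confidences weighted by each bin's sample count. A minimal std-only sketch of that averaging step follows; the function name `weighted_confidence` and the tuple layout are assumptions made for illustration.

```rust
/// Sample-count-weighted mean of per-bin confidences.
/// Each item is `(confidence, sample_count)`.
/// Returns 0.0 when there are no samples at all, matching the empty-model case.
pub fn weighted_confidence(bins: &[(f64, usize)]) -> f64 {
    let total: usize = bins.iter().map(|&(_, n)| n).sum();
    if total == 0 {
        return 0.0;
    }
    let weighted: f64 = bins.iter().map(|&(c, n)| c * n as f64).sum();
    weighted / total as f64
}
```

With one bin at confidence 0.5 over 10 samples and another at 1.0 over 30 samples, the result is (5 + 30) / 40 = 0.875, so well-populated bins dominate the estimate.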

impl SpatialBinManager {

pub fn new(num_bins: usize, image_width: f64) -> Self {

Self {

num_bins,

bin_width: image_width / num_bins as f64,

image_width,

}

}

pub fn get_bin(&self, x_position: f64) -> BinId {

let bin_index = ((x_position / self.bin_width) as usize).min(self.num_bins - 1);

BinId(bin_index)

}

pub fn get_bin_range(&self, bin_id: BinId) -> (f64, f64) {

let start = bin_id.0 as f64 * self.bin_width;

let end = start + self.bin_width;

(start, end)

}

}
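
The position-to-bin mapping in `get_bin` can be sketched as a pair of free functions. This is an illustrative, std-only version: `bin_index` and `bin_range` are hypothetical names, and the `Option` return for the zero-bin case is an added assumption (the listing above would underflow on `num_bins - 1` if `num_bins` were 0).

```rust
/// Maps a continuous x position to a bin index in `0..num_bins`.
/// Returns `None` when there are no bins or the width is not positive.
pub fn bin_index(x: f64, image_width: f64, num_bins: usize) -> Option<usize> {
    if num_bins == 0 || image_width <= 0.0 || image_width.is_nan() {
        return None;
    }
    let bin_width = image_width / num_bins as f64;
    // Float-to-integer `as` casts saturate: negative or NaN positions become 0.
    let raw = (x / bin_width) as usize;
    // Positions at or beyond the right edge fall into the last bin.
    Some(raw.min(num_bins - 1))
}

/// Returns the `[start, end)` range covered by a bin.
/// Precondition: `num_bins > 0`.
pub fn bin_range(index: usize, image_width: f64, num_bins: usize) -> (f64, f64) {
    let bin_width = image_width / num_bins as f64;
    let start = index as f64 * bin_width;
    (start, start + bin_width)
}
```

For a 64-unit-wide image with 4 bins, each bin is 16 units wide: x = 16.0 lands in bin 1, and x = 64.0 (the right edge) is clamped into bin 3.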

impl StatisticalModel {

pub fn new() -> Self {

Self {

mean_length: 0.0,

length_variance: 0.0,

mean_value: 0.0,

value_histogram: HashMap::new(),

trend_coefficients: DVector::zeros(4), // Constant, linear, quadratic, and cubic terms

model_confidence: 0.0,

sample_count: 0,

}

}

pub fn update_with_run(&mut self, run: &ContinuousRun) {

let length = run.length();

let value = run.value;

// Update running statistics

self.sample_count += 1;

let n = self.sample_count as f64;

// Update mean length (online algorithm)

let delta_length = length - self.mean_length;

self.mean_length += delta_length / n;

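        // Update length variance (Welford's online update): the increment is the
        // deviation from the old mean times the deviation from the updated mean
        if self.sample_count > 1 {
            self.length_variance = ((n - 2.0) * self.length_variance
                + delta_length * (length - self.mean_length))
                / (n - 1.0);
        }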

// Update mean value

let delta_value = value as f64 - self.mean_value;

self.mean_value += delta_value / n;

        // Update value histogram with raw counts; divide an entry by
        // `sample_count` to obtain its relative frequency
        *self.value_histogram.entry(value).or_insert(0.0) += 1.0;

// Update model confidence based on consistency

self.update_confidence();

}

fn update_confidence(&mut self) {

// Simple confidence metric based on sample count and variance

let count_factor = (self.sample_count as f64 / 100.0).min(1.0);

let variance_factor = if self.length_variance > 0.0 {

1.0 / (1.0 + self.length_variance)

} else {

1.0

};

self.model_confidence = count_factor * variance_factor;

}

}
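
The running statistics in `update_with_run` are a single-pass (online) update. A standard formulation for this is Welford's algorithm, sketched below with std only. The `RunningStats` type is hypothetical, and treating Welford's method as the intended behaviour is an assumption.

```rust
/// Hypothetical accumulator for Welford's online mean and variance.
#[derive(Debug, Default, Clone)]
pub struct RunningStats {
    count: usize,
    mean: f64,
    /// Sum of squared deviations from the current mean.
    m2: f64,
}

impl RunningStats {
    /// Folds one sample into the running mean and squared-deviation sum.
    pub fn push(&mut self, x: f64) {
        self.count += 1;
        let delta = x - self.mean; // deviation from the old mean
        self.mean += delta / self.count as f64;
        self.m2 += delta * (x - self.mean); // old deviation times new deviation
    }

    pub fn mean(&self) -> f64 {
        self.mean
    }

    /// Unbiased sample variance; 0.0 until at least two samples are seen.
    pub fn sample_variance(&self) -> f64 {
        if self.count < 2 {
            0.0
        } else {
            self.m2 / (self.count - 1) as f64
        }
    }
}
```

Feeding the run lengths 2, 4, 4, 4, 5, 5, 7, 9 gives a mean of 5 and a sample variance of 32/7, the same values a two-pass computation produces.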

3. Main Encoder Implementation

// src/encoder.rs

use crate::continuous_run::{ContinuousRun, GeometricProperties, PrimitiveType};

use crate::expectation_prior::{ExpectationPrior, Prediction};

use nalgebra::{DMatrix, Vector2};

use std::collections::HashMap;

/// CEP-RLE encoder with analysis-ready output

pub struct CepRleEncoder {

/// Image dimensions

width: usize,

height: usize,

/// Expectation-prior mechanism

expectation_prior: ExpectationPrior,

/// Precision configuration

precision_config: PrecisionConfig,

/// Analysis configuration

analysis_config: AnalysisConfig,

}

/// Precision configuration for sub-pixel encoding

#[derive(Debug, Clone)]

pub struct PrecisionConfig {

/// Base precision for coordinates

pub base_precision: f64,

/// Confidence-dependent precision scaling

pub confidence_scaling: bool,

/// Maximum precision limit

pub max_precision: f64,

/// Minimum precision limit

pub min_precision: f64,

}

/// Analysis configuration

#[derive(Debug, Clone)]

pub struct AnalysisConfig {

/// Enable geometric analysis

pub geometric_analysis: bool,

/// Enable spatial relationship computation

pub spatial_relationships: bool,

/// Enable temporal analysis (for video)

pub temporal_analysis: bool,

/// Smoothness analysis window size

pub smoothness_window: usize,

}

/// Encoded scanline with analysis metadata

#[derive(Debug, Clone)]

pub struct EncodedScanline {

/// Row index

pub row: u32,

/// Continuous runs with analysis data

pub runs: Vec<ContinuousRun>,

/// Scanline-level statistics

pub statistics: ScanlineStatistics,

/// Encoding metrics

pub encoding_metrics: EncodingMetrics,

}

/// Scanline-level statistics

#[derive(Debug, Clone)]

pub struct ScanlineStatistics {

/// Total number of runs

pub run_count: usize,

/// Average run length

pub avg_run_length: f64,

/// Run length variance

pub run_length_variance: f64,

/// Geometric complexity measure

pub geometric_complexity: f64,

/// Prediction accuracy

pub prediction_accuracy: f64,

}

/// Encoding performance metrics

#[derive(Debug, Clone)]

pub struct EncodingMetrics {

/// Bytes used for coordinates

pub coordinate_bytes: usize,

/// Bytes used for values

pub value_bytes: usize,

/// Bytes used for metadata

pub metadata_bytes: usize,

/// Compression ratio vs. discrete RLE

pub compression_ratio: f64,

/// Encoding time in microseconds

pub encoding_time_us: u64,

}

impl CepRleEncoder {

/// Create new encoder

pub fn new(

width: usize,

height: usize,

precision_config: PrecisionConfig,

analysis_config: AnalysisConfig,

) -> Self {

let expectation_prior = ExpectationPrior::new(

8, // Window size

(width / 16).max(1), // Number of spatial bins (at least one, so images narrower than 16 pixels do not produce zero bins)

width as f64,

);

Self {

width,

height,

expectation_prior,

precision_config,

analysis_config,

}

}

/// Encode a complete image

pub fn encode_image(&mut self, image: &DMatrix<u32>) -> Vec<EncodedScanline> {

let mut encoded_scanlines = Vec::with_capacity(self.height);

for row in 0..self.height {

let scanline = self.extract_scanline(image, row);

let encoded = self.encode_scanline(scanline, row as u32);

encoded_scanlines.push(encoded);

}

// Post-process for spatial relationships

if self.analysis_config.spatial_relationships {

self.compute_spatial_relationships(&mut encoded_scanlines);

}

encoded_scanlines

}

/// Encode a single scanline

pub fn encode_scanline(&mut self, scanline: Vec<u32>, row: u32) -> EncodedScanline {

let start_time = std::time::Instant::now();

// Get prediction from expectation prior

let prediction = self.expectation_prior.predict_scanline(row);

// Detect continuous runs with sub-pixel precision

let mut runs = self.detect_continuous_runs(&scanline, row, &prediction);

// Perform geometric analysis

if self.analysis_config.geometric_analysis {

self.analyze_geometry(&mut runs);

}

// Compute encoding precision requirements

self.compute_precision_requirements(&mut runs, &prediction);

// Update expectation prior

self.expectation_prior.update_scanline(runs.clone());

// Compute statistics and metrics

let statistics = self.compute_scanline_statistics(&runs, &prediction);

let encoding_metrics = self.compute_encoding_metrics(&runs, start_time.elapsed());

EncodedScanline {

row,

runs,

statistics,

encoding_metrics,

}

}

/// Extract scanline from image matrix

fn extract_scanline(&self, image: &DMatrix<u32>, row: usize) -> Vec<u32> {

image.row(row).iter().cloned().collect()

}

/// Detect continuous runs with sub-pixel boundaries

fn detect_continuous_runs(

&self,

scanline: &[u32],

row: u32,

prediction: &Prediction

) -> Vec<ContinuousRun> {

let mut runs = Vec::new();

if scanline.is_empty() {

return runs;

}

        let mut current_value = scanline[0];
        let mut run_start = 0usize;

        // Walk one index past the end so the final run is flushed by the same
        // code path, including a trailing single-pixel run
        for i in 1..=scanline.len() {
            if i < scanline.len() && scanline[i] == current_value {
                continue;
            }

            // Pixels run_start..i share current_value.
            // Apply sub-pixel boundary refinement
            let (refined_start, refined_end) = self.refine_boundaries(
                run_start as f64, i as f64, scanline, i - 1
            );

            // Create continuous run
            let mut run = ContinuousRun::new(refined_start, refined_end, current_value, row);

            // Set confidence based on prediction accuracy
            run.confidence = self.compute_run_confidence(&run, prediction);
            runs.push(run);

            // Start new run
            if i < scanline.len() {
                current_value = scanline[i];
                run_start = i;
            }
        }

runs

}

/// Refine run boundaries to sub-pixel precision

fn refine_boundaries(

&self,

start: f64,

end: f64,

scanline: &[u32],

pixel_index: usize

) -> (f64, f64) {

        // Placeholder sub-pixel boundary refinement. A full implementation would
        // analyze local gradients and edge characteristics; the random jitter
        // below makes the output non-deterministic and is for illustration only.
        let boundary_refinement = 0.1; // Simplified refinement
        let _ = pixel_index; // Not needed by this placeholder

        // Only interior boundaries are refined; the image edges stay fixed
        let refined_start = if start > 0.0 {
            start - boundary_refinement + (rand::random::<f64>() * 2.0 - 1.0) * 0.05
        } else {
            start
        };

        let refined_end = if end < scanline.len() as f64 {
            end + boundary_refinement + (rand::random::<f64>() * 2.0 - 1.0) * 0.05
        } else {
            end
        };

        (refined_start, refined_end)

}

/// Compute run confidence based on prediction

fn compute_run_confidence(&self, run: &ContinuousRun, prediction: &Prediction) -> f64 {

        // Compare run with prediction to determine confidence.
        // Predicted boundaries are read as consecutive (start, end) pairs.
        let mut best_match_confidence: f64 = 0.0;

        for pair in prediction.boundaries.chunks_exact(2) {
            let (pred_start, pred_end) = (pair[0], pair[1]);
            let overlap = run.overlap(&ContinuousRun::new(pred_start, pred_end, 0, run.row));
            let overlap_ratio = overlap / run.length().max(pred_end - pred_start);

            if overlap_ratio > best_match_confidence {
                best_match_confidence = overlap_ratio;
            }
        }

        best_match_confidence.max(0.1) // Minimum confidence

}

/// Analyze geometric properties of runs

fn analyze_geometry(&self, runs: &mut [ContinuousRun]) {

for i in 0..runs.len() {

let mut geometric_props = GeometricProperties::default();

// Compute curvature based on neighboring runs

geometric_props.curvature = self.compute_curvature(runs, i);

// Compute smoothness measure

geometric_props.smoothness = self.compute_smoothness(runs, i);

// Compute gradient/orientation

geometric_props.gradient = self.compute_gradient(runs, i);

// Classify primitive type

geometric_props.primitive_type = self.classify_primitive(&geometric_props);

// Compute arc length

geometric_props.arc_length = runs[i].length();

runs[i].geometric_props = geometric_props;

}

}

/// Compute local curvature measure

fn compute_curvature(&self, runs: &[ContinuousRun], index: usize) -> f64 {

if index == 0 || index >= runs.len() - 1 {

return 0.0;

}

let prev = &runs[index - 1];

let curr = &runs[index];

let next = &runs[index + 1];

// Second derivative approximation

let d2 = (next.center() - curr.center()) - (curr.center() - prev.center());

d2.abs()

}

/// Compute smoothness measure

fn compute_smoothness(&self, runs: &[ContinuousRun], index: usize) -> f64 {

let window_size = self.analysis_config.smoothness_window;

let start = index.saturating_sub(window_size / 2);

let end = (index + window_size / 2 + 1).min(runs.len());

if end - start < 3 {

return 1.0; // Assume smooth for short sequences

}

let mut variance = 0.0;

let window_runs = &runs[start..end];

let mean_length = window_runs.iter().map(|r| r.length()).sum::<f64>() / window_runs.len() as f64;

for run in window_runs {

let diff = run.length() - mean_length;

variance += diff * diff;

}

variance /= window_runs.len() as f64;

// Convert variance to smoothness (higher variance = lower smoothness)

1.0 / (1.0 + variance)

}

/// Compute gradient/orientation vector

fn compute_gradient(&self, runs: &[ContinuousRun], index: usize) -> Vector2<f64> {

if index == 0 || index >= runs.len() - 1 {

return Vector2::zeros();

}

let curr = &runs[index];

let next = &runs[index + 1];

let dx = next.center() - curr.center();

let dy = 1.0; // Scanline spacing

Vector2::new(dx, dy).normalize()

}

/// Classify geometric primitive type

fn classify_primitive(&self, props: &GeometricProperties) -> PrimitiveType {

if props.curvature > 0.5 {

if props.smoothness < 0.3 {

PrimitiveType::Corner

} else {

PrimitiveType::Curve

}

} else if props.smoothness > 0.8 {

if props.curvature < 0.1 {

PrimitiveType::Line

} else {

PrimitiveType::Smooth

}

} else if props.smoothness < 0.3 {

PrimitiveType::Noise

} else {

PrimitiveType::Edge

}

}

/// Compute precision requirements for each run

fn compute_precision_requirements(&self, runs: &mut [ContinuousRun], prediction: &Prediction) {

        for run in runs.iter_mut() {
            let base_precision = self.precision_config.base_precision;

            if self.precision_config.confidence_scaling {
                // Scale the base precision by prediction confidence and local curvature
                let confidence_factor = 1.0 / (1.0 + run.confidence);
                let geometric_factor = 1.0 + run.geometric_props.curvature;
                let required_precision = base_precision * confidence_factor * geometric_factor;

                // Clamp to configured limits. Precision values are tolerances, so
                // max_precision (the finest, numerically smallest) is the lower
                // bound and min_precision (the coarsest) is the upper bound.
                let clamped_precision = required_precision
                    .max(self.precision_config.max_precision)
                    .min(self.precision_config.min_precision);

                // ContinuousRun has no field for the precision requirement yet, so
                // the value is only computed here. It must not overwrite
                // run.confidence, which feeds the prediction-accuracy statistic.
                let _ = clamped_precision;
            }
        }

}

/// Compute spatial relationships between scanlines

fn compute_spatial_relationships(&self, encoded_scanlines: &mut [EncodedScanline]) {

        // Horizontal neighbors: the runs immediately left and right in the same
        // scanline. Done for every row, including row 0. The run count is read
        // before the mutable iteration to satisfy the borrow checker.
        for scanline in encoded_scanlines.iter_mut() {
            let row = scanline.row;
            let run_count = scanline.runs.len();

            for (run_idx, run) in scanline.runs.iter_mut().enumerate() {
                if run_idx > 0 {
                    run.spatial_rels.horizontal_neighbors.push(
                        crate::continuous_run::RunId {
                            scanline: row,
                            index: (run_idx - 1) as u32,
                        }
                    );
                }
                if run_idx + 1 < run_count {
                    run.spatial_rels.horizontal_neighbors.push(
                        crate::continuous_run::RunId {
                            scanline: row,
                            index: (run_idx + 1) as u32,
                        }
                    );
                }
            }
        }

        // Vertical neighbors: overlapping runs in the previous scanline
        for i in 1..encoded_scanlines.len() {
            let (prev_slice, curr_slice) = encoded_scanlines.split_at_mut(i);
            let prev_scanline = &prev_slice[i - 1];
            let curr_scanline = &mut curr_slice[0];

            for run in curr_scanline.runs.iter_mut() {
                let mut vertical_coherence = 0.0;
                let mut neighbor_count = 0;

                for (prev_idx, prev_run) in prev_scanline.runs.iter().enumerate() {
                    let overlap = run.overlap(prev_run);
                    if overlap > 0.0 {
                        let overlap_ratio = overlap / run.length().max(prev_run.length());
                        vertical_coherence += overlap_ratio;
                        neighbor_count += 1;

                        // Add to vertical neighbors
                        run.spatial_rels.vertical_neighbors.push(
                            crate::continuous_run::RunId {
                                scanline: prev_scanline.row,
                                index: prev_idx as u32,
                            }
                        );
                    }
                }

                if neighbor_count > 0 {
                    vertical_coherence /= neighbor_count as f64;
                }
                run.spatial_rels.vertical_coherence = vertical_coherence;
            }
        }

}

/// Compute scanline statistics

fn compute_scanline_statistics(&self, runs: &[ContinuousRun], prediction: &Prediction) -> ScanlineStatistics {

let run_count = runs.len();

let avg_run_length = if run_count > 0 {

runs.iter().map(|r| r.length()).sum::<f64>() / run_count as f64

} else {

0.0

};

let run_length_variance = if run_count > 1 {

let variance_sum = runs.iter()

.map(|r| (r.length() - avg_run_length).powi(2))

.sum::<f64>();

variance_sum / (run_count - 1) as f64

} else {

0.0

};

let geometric_complexity = runs.iter()

.map(|r| r.geometric_props.curvature + (1.0 - r.geometric_props.smoothness))

.sum::<f64>() / run_count.max(1) as f64;

let prediction_accuracy = runs.iter()

.map(|r| r.confidence)

.sum::<f64>() / run_count.max(1) as f64;

ScanlineStatistics {

run_count,

avg_run_length,

run_length_variance,

geometric_complexity,

prediction_accuracy,

}

}

/// Compute encoding metrics

fn compute_encoding_metrics(&self, runs: &[ContinuousRun], duration: std::time::Duration) -> EncodingMetrics {

// Estimate encoding sizes

let coordinate_bytes = runs.len() * 16; // 2 x f64 coordinates per run

let value_bytes = runs.len() * 4; // u32 value per run

let metadata_bytes = runs.len() * 64; // Estimated metadata size

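// Compare with discrete RLE

let discrete_rle_size = runs.len() * 8; // Simple count + value encoding

let total_size = coordinate_bytes + value_bytes + metadata_bytes;

// Ratio of the discrete RLE estimate to the CEP-RLE estimate. Both estimates

// scale linearly with the run count, so the ratio is a fixed 8 / 84 for any

// non-empty input. Report 0.0 for an empty input instead of NaN (0 / 0).

let compression_ratio = if total_size > 0 {

discrete_rle_size as f64 / total_size as f64

} else {

0.0

};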

EncodingMetrics {

coordinate_bytes,

value_bytes,

metadata_bytes,

compression_ratio,

encoding_time_us: duration.as_micros() as u64,

}

}

}

impl Default for PrecisionConfig {

fn default() -> Self {

Self {

base_precision: 1e-4,

confidence_scaling: true,

max_precision: 1e-6,

min_precision: 1e-3,

}

}

}

impl Default for AnalysisConfig {

fn default() -> Self {

Self {

geometric_analysis: true,

spatial_relationships: true,

temporal_analysis: false,

smoothness_window: 5,

}

}

}
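The run-length statistics in `compute_scanline_statistics` use the sample variance (denominator n - 1) and fall back to 0.0 when there are too few runs. The standalone sketch below reproduces that calculation on plain `f64` lengths. The function name `length_stats` is hypothetical, and the slice stands in for the values returned by `ContinuousRun::length()`.

```rust
/// Mean and sample variance (n - 1 denominator) of a slice of run lengths.
/// Returns (0.0, 0.0) for an empty slice and a variance of 0.0 for a single
/// value, mirroring the guards in `compute_scanline_statistics`.
fn length_stats(lengths: &[f64]) -> (f64, f64) {
    let n = lengths.len();
    if n == 0 {
        return (0.0, 0.0);
    }
    let mean = lengths.iter().sum::<f64>() / n as f64;
    if n < 2 {
        return (mean, 0.0);
    }
    // Sum of squared deviations from the mean.
    let sum_sq: f64 = lengths.iter().map(|l| (l - mean).powi(2)).sum();
    (mean, sum_sq / (n - 1) as f64)
}
```

With a single run the variance is undefined under the n - 1 convention, which is why both this sketch and the encoder return 0.0 in that case rather than dividing by zero.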

4. Analysis Module Implementation

// src/analysis/mod.rs

pub mod geometric;

pub mod spatial_index;

pub mod quality;

use crate::continuous_run::{ContinuousRun, RunId};

use nalgebra::Point2;

/// Analysis results from CEP-RLE compressed data

#[derive(Debug, Clone)]

pub struct AnalysisResult {

/// Detected geometric features

pub geometric_features: Vec<GeometricFeature>,

/// Spatial query interface

pub spatial_index: spatial_index::SpatialIndex,

/// Quality assessment metrics

pub quality_metrics: quality::QualityMetrics,

}

/// Geometric feature extracted from continuous runs

#[derive(Debug, Clone)]

pub struct GeometricFeature {

/// Feature type

pub feature_type: FeatureType,

/// Control points or boundary points

pub points: Vec<Point2<f64>>,

/// Feature confidence [0.0, 1.0]

pub confidence: f64,

/// Associated run IDs

pub run_ids: Vec<RunId>,

}

/// Types of geometric features

#[derive(Debug, Clone, Copy, PartialEq, Eq)]

pub enum FeatureType {

Line,

Curve,

Corner,

Rectangle,

Circle,

Polygon,

}

/// Direct analysis interface for CEP-RLE data

pub struct DirectAnalyzer {

/// Geometric feature extractor

geometric_analyzer: geometric::GeometricAnalyzer,

/// Spatial indexing system

spatial_indexer: spatial_index::SpatialIndexer,

/// Quality assessment system

quality_assessor: quality::QualityAssessor,

}

impl DirectAnalyzer {

pub fn new() -> Self {

Self {

geometric_analyzer: geometric::GeometricAnalyzer::new(),

spatial_indexer: spatial_index::SpatialIndexer::new(),

quality_assessor: quality::QualityAssessor::new(),

}

}

/// Perform complete analysis on encoded data

pub fn analyze(&mut self, encoded_data: &[crate::encoder::EncodedScanline]) -> AnalysisResult {

// Extract all runs for analysis

let all_runs: Vec<&ContinuousRun> = encoded_data

.iter()

.flat_map(|scanline| &scanline.runs)

.collect();

// Geometric feature extraction

let geometric_features = self.geometric_analyzer.extract_features(&all_runs);

// Build spatial index

let spatial_index = self.spatial_indexer.build_index(&all_runs);

// Quality assessment

let quality_metrics = self.quality_assessor.assess_quality(&all_runs, encoded_data);

AnalysisResult {

geometric_features,

spatial_index,

quality_metrics,

}

}

}

// src/analysis/geometric.rs

use super::{GeometricFeature, FeatureType};

use crate::continuous_run::{ContinuousRun, PrimitiveType};

use nalgebra::Point2;

/// Geometric feature extraction from continuous runs

pub struct GeometricAnalyzer {

/// Minimum confidence for feature detection

min_confidence: f64,

/// Line detection tolerance

line_tolerance: f64,

/// Curve fitting tolerance

curve_tolerance: f64,

}

impl GeometricAnalyzer {

pub fn new() -> Self {

Self {

min_confidence: 0.7,

line_tolerance: 0.1,

curve_tolerance: 0.2,

}

}

/// Extract geometric features from runs

pub fn extract_features(&self, runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

let mut features = Vec::new();

// Group runs by primitive type

let lines = self.group_by_primitive(runs, PrimitiveType::Line);

let curves = self.group_by_primitive(runs, PrimitiveType::Curve);

let corners = self.group_by_primitive(runs, PrimitiveType::Corner);

// Extract line features

features.extend(self.extract_lines(&lines));

// Extract curve features

features.extend(self.extract_curves(&curves));

// Extract corner features

features.extend(self.extract_corners(&corners));

// Extract composite features (rectangles, circles)

features.extend(self.extract_composite_features(runs));

features

}

/// Group runs by primitive type

fn group_by_primitive(&self, runs: &[&ContinuousRun], primitive_type: PrimitiveType) -> Vec<&ContinuousRun> {

runs.iter()

.filter(|run| run.geometric_props.primitive_type == primitive_type)

.cloned()

.collect()

}

/// Extract line features from line-classified runs

fn extract_lines(&self, line_runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

let mut features = Vec::new();

// Connect adjacent line runs into longer line segments

let line_segments = self.connect_line_runs(line_runs);

for segment in line_segments {

if segment.len() >= 2 {

let start_run = segment[0];

let end_run = segment[segment.len() - 1];

let start_point = Point2::new(start_run.center(), start_run.row as f64);

let end_point = Point2::new(end_run.center(), end_run.row as f64);

let confidence = segment.iter()

.map(|run| run.confidence)

.sum::<f64>() / segment.len() as f64;

let run_ids = segment.iter()

.enumerate()

.map(|(i, run)| crate::continuous_run::RunId {

scanline: run.row,

index: i as u32, // Simplified: position within this segment, not the run's index in its scanline

})

.collect();

features.push(GeometricFeature {

feature_type: FeatureType::Line,

points: vec![start_point, end_point],

confidence,

run_ids,

});

}

}

features

}

/// Connect adjacent line runs into segments

fn connect_line_runs(&self, line_runs: &[&ContinuousRun]) -> Vec<Vec<&ContinuousRun>> {

let mut segments = Vec::new();

let mut visited = vec![false; line_runs.len()];

for i in 0..line_runs.len() {

if visited[i] {

continue;

}

let mut segment = vec![line_runs[i]];

visited[i] = true;

// Extend segment forward and backward

self.extend_segment(&mut segment, line_runs, &mut visited, i);

if segment.len() > 1 {

segments.push(segment);

}

}

segments

}

/// Extend a line segment by finding connected runs

fn extend_segment(

&self,

segment: &mut Vec<&ContinuousRun>,

all_runs: &[&ContinuousRun],

visited: &mut [bool],

start_idx: usize,

) {

let current_run = all_runs[start_idx];

// Look for adjacent runs in consecutive scanlines

for (i, run) in all_runs.iter().enumerate() {

if visited[i] || (run.row as i32 - current_run.row as i32).abs() != 1 {

continue;

}

// Check if runs are aligned (indicating continuation of line)

let position_diff = (run.center() - current_run.center()).abs();

if position_diff < self.line_tolerance {

segment.push(run);

visited[i] = true;

// Recursively extend from this run

self.extend_segment(segment, all_runs, visited, i);

}

}

}

/// Extract curve features

fn extract_curves(&self, curve_runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

let mut features = Vec::new();

// Group curve runs into connected components

let curve_chains = self.build_curve_chains(curve_runs);

for chain in curve_chains {

if chain.len() >= 3 {

// Fit curve to chain of runs

let control_points = self.fit_curve_to_chain(&chain);

let confidence = chain.iter()

.map(|run| run.confidence)

.sum::<f64>() / chain.len() as f64;

let run_ids = chain.iter()

.enumerate()

.map(|(i, run)| crate::continuous_run::RunId {

scanline: run.row,

index: i as u32, // Simplified: position within this chain, not the run's index in its scanline

})

.collect();

features.push(GeometricFeature {

feature_type: FeatureType::Curve,

points: control_points,

confidence,

run_ids,

});

}

}

features

}

/// Build chains of connected curve runs

fn build_curve_chains(&self, curve_runs: &[&ContinuousRun]) -> Vec<Vec<&ContinuousRun>> {

// Similar to line connection but with curve tolerance

let mut chains = Vec::new();

let mut visited = vec![false; curve_runs.len()];

for i in 0..curve_runs.len() {

if visited[i] {

continue;

}

let mut chain = vec![curve_runs[i]];

visited[i] = true;

// Build chain using curve connectivity

self.build_curve_chain(&mut chain, curve_runs, &mut visited, i);

if chain.len() >= 3 {

chains.push(chain);

}

}

chains

}

/// Build a single curve chain

fn build_curve_chain(

&self,

chain: &mut Vec<&ContinuousRun>,

all_runs: &[&ContinuousRun],

visited: &mut [bool],

start_idx: usize,

) {

let current_run = all_runs[start_idx];

for (i, run) in all_runs.iter().enumerate() {

if visited[i] || (run.row as i32 - current_run.row as i32).abs() != 1 {

continue;

}

// Check curve continuity

let position_diff = (run.center() - current_run.center()).abs();

let curvature_compatibility = (run.geometric_props.curvature - current_run.geometric_props.curvature).abs();

if position_diff < self.curve_tolerance && curvature_compatibility < 0.3 {

chain.push(run);

visited[i] = true;

self.build_curve_chain(chain, all_runs, visited, i);

}

}

}

/// Fit curve to chain of runs

fn fit_curve_to_chain(&self, chain: &[&ContinuousRun]) -> Vec<Point2<f64>> {

// Simplified control-point selection: samples runs along the chain instead of performing a true Bézier fit

let mut points = Vec::new();

if chain.len() >= 2 {

// Start point

points.push(Point2::new(chain[0].center(), chain[0].row as f64));

// Control points (simplified - would use curve fitting algorithms)

if chain.len() >= 4 {

let mid1 = chain.len() / 3;

let mid2 = 2 * chain.len() / 3;

points.push(Point2::new(chain[mid1].center(), chain[mid1].row as f64));

points.push(Point2::new(chain[mid2].center(), chain[mid2].row as f64));

}

// End point

let last = chain.len() - 1;

points.push(Point2::new(chain[last].center(), chain[last].row as f64));

}

points

}

/// Extract corner features

fn extract_corners(&self, corner_runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

corner_runs.iter()

.filter(|run| run.confidence >= self.min_confidence)

.map(|run| {

let point = Point2::new(run.center(), run.row as f64);

GeometricFeature {

feature_type: FeatureType::Corner,

points: vec![point],

confidence: run.confidence,

run_ids: vec![crate::continuous_run::RunId {

scanline: run.row,

index: 0, // Simplified

}],

}

})

.collect()

}

/// Extract composite features (rectangles, circles, etc.)

fn extract_composite_features(&self, runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

let mut features = Vec::new();

// Rectangle detection using spatial patterns

features.extend(self.detect_rectangles(runs));

// Circle detection using curvature analysis

features.extend(self.detect_circles(runs));

features

}

/// Detect rectangular features

fn detect_rectangles(&self, _runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

// Placeholder: rectangle detection is not implemented yet, so no features are returned

Vec::new()

}

/// Detect circular features

fn detect_circles(&self, _runs: &[&ContinuousRun]) -> Vec<GeometricFeature> {

// Placeholder: circle detection from curvature patterns is not implemented yet, so no features are returned

Vec::new()

}

}
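`extend_segment` and `build_curve_chain` above grow a chain recursively, so a very long chain recurses once per run. The same linking rule (adjacent scanlines, centers within a tolerance) can be sketched with an explicit stack instead. `Run` below is a hypothetical stand-in carrying only `row` and `center`, not the crate's `ContinuousRun`, and `link_runs` is an illustrative name.

```rust
/// Hypothetical stand-in for `ContinuousRun`: only the fields the linker needs.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Run {
    row: u32,
    center: f64,
}

/// Group runs into chains. Two runs are linked when they sit on adjacent
/// scanlines and their centers differ by less than `tolerance`.
/// Returns lists of indices into `runs` and keeps only chains with at least
/// two runs, as `connect_line_runs` does.
fn link_runs(runs: &[Run], tolerance: f64) -> Vec<Vec<usize>> {
    let mut visited = vec![false; runs.len()];
    let mut chains = Vec::new();

    for start in 0..runs.len() {
        if visited[start] {
            continue;
        }
        visited[start] = true;
        let mut chain = vec![start];
        // Explicit work stack replaces the recursive call.
        let mut stack = vec![start];

        while let Some(cur) = stack.pop() {
            for (i, run) in runs.iter().enumerate() {
                if visited[i] {
                    continue;
                }
                let adjacent = run.row.abs_diff(runs[cur].row) == 1;
                let aligned = (run.center - runs[cur].center).abs() < tolerance;
                if adjacent && aligned {
                    visited[i] = true;
                    chain.push(i);
                    stack.push(i);
                }
            }
        }

        if chain.len() >= 2 {
            chains.push(chain);
        }
    }
    chains
}
```

Each chain contains the same runs that the recursive version would collect, but the order within a chain can differ when a run has more than one eligible neighbor, so callers that rely on the first and last elements as endpoints may want to sort by `row` first.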

// src/analysis/spatial_index.rs

use crate::continuous_run::{ContinuousRun, RunId};

use nalgebra::Point2;

use kiddo::KdTree;

use rstar::{RTree, AABB};

/// Spatial indexing for efficient queries on continuous runs

pub struct SpatialIndex {

/// K-d tree for point queries

kdtree: KdTree<f64, RunId, 2>,

/// R-tree for range queries

rtree: RTree<SpatialRun>,

/// Run lookup table

run_lookup: std::collections::HashMap<RunId, ContinuousRun>,

}

/// Spatial run wrapper for R-tree

#[derive(Debug, Clone)]

pub struct SpatialRun {

pub run_id: RunId,

pub envelope: AABB<Point2<f64>>,

pub center: Point2<f64>,

}

/// Spatial indexer for building indices

pub struct SpatialIndexer {

/// Spatial bin size for indexing

bin_size: f64,

}

impl SpatialIndexer {

pub fn new() -> Self {

Self {

bin_size: 10.0,

}

}

/// Build spatial index from runs

pub fn build_index(&self, runs: &[&ContinuousRun]) -> SpatialIndex {

let mut kdtree = KdTree::new();

let mut spatial_runs = Vec::new();

let mut run_lookup = std::collections::HashMap::new();

for (i, run) in runs.iter().enumerate() {

let run_id = RunId {

scanline: run.row,

index: i as u32, // Simplified: position in the flattened run list, not the run's index in its scanline

};

let center = Point2::new(run.center(), run.row as f64);

let min_point = Point2::new(run.x_start, run.row as f64);

let max_point = Point2::new(run.x_end, run.row as f64);

// Add to k-d tree

kdtree.add([run.center(), run.row as f64], run_id).unwrap();

// Add to R-tree

let spatial_run = SpatialRun {

run_id,

envelope: AABB::from_corners(min_point, max_point),

center,

};

spatial_runs.push(spatial_run);

// Add to lookup table

run_lookup.insert(run_id, (*run).clone());

}

let rtree = RTree::bulk_load(spatial_runs);

SpatialIndex {

kdtree,

rtree,

run_lookup,

}

}

}

impl SpatialIndex {

/// Find runs within radius of a point

pub fn query_radius(&self, center: Point2<f64>, radius: f64) -> Vec<&ContinuousRun> {

let mut results = Vec::new();

let neighbors = self.kdtree.within_unsorted(&[center.x, center.y], radius);

for (_, run_id) in neighbors {

if let Some(run) = self.run_lookup.get(&run_id) {

results.push(run);

}

}

results

}

/// Find runs within a bounding box

pub fn query_bbox(&self, min: Point2<f64>, max: Point2<f64>) -> Vec<&ContinuousRun> {

let query_envelope = AABB::from_corners(min, max);

self.rtree

.locate_in_envelope(&query_envelope)

.filter_map(|spatial_run| self.run_lookup.get(&spatial_run.run_id))

.collect()

}

/// Find nearest neighbors to a point

pub fn nearest_neighbors(&self, point: Point2<f64>, k: usize) -> Vec<&ContinuousRun> {

let neighbors = self.kdtree.nearest_n(&[point.x, point.y], k);

neighbors.iter()

.filter_map(|(_, run_id)| self.run_lookup.get(run_id))

.collect()

}

}

impl rstar::RTreeObject for SpatialRun {

type Envelope = AABB<Point2<f64>>;

fn envelope(&self) -> Self::Envelope {

self.envelope

}

}

impl rstar::PointDistance for SpatialRun {

fn distance_2(&self, point: &Point2<f64>) -> f64 {

(self.center - point).norm_squared()

}

}

// src/analysis/quality.rs

use crate::{continuous_run::ContinuousRun, encoder::EncodedScanline};

/// Quality assessment metrics for CEP-RLE

#[derive(Debug, Clone)]

pub struct QualityMetrics {

/// Overall compression quality [0.0, 1.0]

pub overall_quality: f64,

/// Geometric preservation accuracy

pub geometric_accuracy: f64,

/// Spatial coherence measure

pub spatial_coherence: f64,

/// Prediction accuracy

pub prediction_accuracy: f64,

/// Analysis readiness score

pub analysis_readiness: f64,

/// Detailed quality breakdown

pub detailed_metrics: DetailedQualityMetrics,

}

/// Detailed quality breakdown

#[derive(Debug, Clone)]

pub struct DetailedQualityMetrics {

/// Per-scanline quality scores

pub scanline_quality: Vec<f64>,

/// Per-primitive-type quality

pub primitive_quality: std::collections::HashMap<crate::continuous_run::PrimitiveType, f64>,

/// Spatial quality distribution

pub spatial_quality_map: Vec<Vec<f64>>,

/// Temporal consistency (for video)

pub temporal_consistency: Option<f64>,

}

/// Quality assessor

pub struct QualityAssessor {

/// Quality assessment parameters

params: QualityParams,

}

/// Quality assessment parameters

#[derive(Debug, Clone)]

pub struct QualityParams {

/// Weight for geometric accuracy

pub geometric_weight: f64,

/// Weight for spatial coherence

pub spatial_weight: f64,

/// Weight for prediction accuracy

pub prediction_weight: f64,

/// Minimum confidence threshold

pub min_confidence: f64,

}

impl QualityAssessor {

pub fn new() -> Self {

Self {

params: QualityParams::default(),

}

}

/// Assess quality of encoded data

pub fn assess_quality(

&self,

runs: &[&ContinuousRun],

encoded_scanlines: &[EncodedScanline],

) -> QualityMetrics {

// Geometric accuracy assessment

let geometric_accuracy = self.assess_geometric_accuracy(runs);

// Spatial coherence assessment

let spatial_coherence = self.assess_spatial_coherence(runs);

// Prediction accuracy assessment

let prediction_accuracy = self.assess_prediction_accuracy(encoded_scanlines);

// Analysis readiness assessment

let analysis_readiness = self.assess_analysis_readiness(runs);

// Compute overall quality

let overall_quality = self.params.geometric_weight * geometric_accuracy

+ self.params.spatial_weight * spatial_coherence

+ self.params.prediction_weight * prediction_accuracy;

// Detailed metrics

let detailed_metrics = self.compute_detailed_metrics(runs, encoded_scanlines);

QualityMetrics {

overall_quality,

geometric_accuracy,

spatial_coherence,

prediction_accuracy,

analysis_readiness,

detailed_metrics,

}

}

/// Assess geometric accuracy

fn assess_geometric_accuracy(&self, runs: &[&ContinuousRun]) -> f64 {

if runs.is_empty() {

return 0.0;

}

let mut total_accuracy = 0.0;

for run in runs {

// Assess boundary precision

let boundary_precision = if run.length() > 0.0 {

1.0 / (1.0 + (run.x_end - run.x_start).fract().abs())

} else {

0.0

};

// Assess geometric property consistency

let property_consistency = self.assess_property_consistency(run);

// Combine metrics

let run_accuracy = run.confidence * boundary_precision * property_consistency;

total_accuracy += run_accuracy;

}

total_accuracy / runs.len() as f64

}

/// Assess property consistency for a run

fn assess_property_consistency(&self, run: &ContinuousRun) -> f64 {

let props = &run.geometric_props;

// Check consistency between curvature and primitive type

let curvature_consistency = match props.primitive_type {

crate::continuous_run::PrimitiveType::Line => {

if props.curvature < 0.1 { 1.0 } else { 1.0 - props.curvature }

}

crate::continuous_run::PrimitiveType::Curve => {

if props.curvature > 0.3 { 1.0 } else { props.curvature / 0.3 }

}

crate::continuous_run::PrimitiveType::Corner => {

if props.curvature > 0.5 { 1.0 } else { props.curvature / 0.5 }

}

_ => 0.8, // Default consistency for other types

};

// Check smoothness consistency

let smoothness_consistency = match props.primitive_type {

crate::continuous_run::PrimitiveType::Smooth => props.smoothness,

crate::continuous_run::PrimitiveType::Noise => 1.0 - props.smoothness,

_ => 0.8,

};

(curvature_consistency + smoothness_consistency) / 2.0

}

/// Assess spatial coherence

fn assess_spatial_coherence(&self, runs: &[&ContinuousRun]) -> f64 {

if runs.len() < 2 {

return 1.0;

}

let mut total_coherence = 0.0;

let mut coherence_count = 0;

for run in runs {

if !run.spatial_rels.vertical_neighbors.is_empty() {

total_coherence += run.spatial_rels.vertical_coherence;

coherence_count += 1;

}

}

if coherence_count > 0 {

total_coherence / coherence_count as f64

} else {

0.5 // Default coherence when no vertical relationships

}

}

/// Assess prediction accuracy

fn assess_prediction_accuracy(&self, encoded_scanlines: &[EncodedScanline]) -> f64 {

if encoded_scanlines.is_empty() {

return 0.0;

}

let total_accuracy: f64 = encoded_scanlines

.iter()

.map(|scanline| scanline.statistics.prediction_accuracy)

.sum();

total_accuracy / encoded_scanlines.len() as f64

}

/// Assess analysis readiness

fn assess_analysis_readiness(&self, runs: &[&ContinuousRun]) -> f64 {

if runs.is_empty() {

return 0.0;

}

let mut readiness_scores = Vec::new();

for run in runs {

let mut score = 0.0;

// Geometric properties completeness

if run.geometric_props.curvature >= 0.0 { score += 0.2; }

if run.geometric_props.smoothness >= 0.0 { score += 0.2; }

if run.geometric_props.gradient.norm() > 0.0 { score += 0.2; }

// Spatial relationships completeness

if run.spatial_rels.vertical_coherence > 0.0 { score += 0.2; }

if !run.spatial_rels.horizontal_neighbors.is_empty() ||

!run.spatial_rels.vertical_neighbors.is_empty() { score += 0.2; }

readiness_scores.push(score);

}

readiness_scores.iter().sum::<f64>() / runs.len() as f64

}

/// Compute detailed quality metrics

fn compute_detailed_metrics(

&self,

runs: &[&ContinuousRun],

encoded_scanlines: &[EncodedScanline],

) -> DetailedQualityMetrics {

// Per-scanline quality

let scanline_quality: Vec<f64> = encoded_scanlines

.iter()

.map(|scanline| {

let geometric_quality = scanline.runs.iter()

.map(|r| r.confidence * r.geometric_props.smoothness)

.sum::<f64>() / scanline.runs.len().max(1) as f64;

let prediction_quality = scanline.statistics.prediction_accuracy;

(geometric_quality + prediction_quality) / 2.0

})

.collect();

// Per-primitive-type quality

let mut primitive_quality = std::collections::HashMap::new();

let mut primitive_counts = std::collections::HashMap::new();

for run in runs {

let ptype = run.geometric_props.primitive_type;

let quality = run.confidence * run.geometric_props.smoothness;

*primitive_quality.entry(ptype).or_insert(0.0) += quality;

*primitive_counts.entry(ptype).or_insert(0) += 1;

}

for (ptype, total_quality) in primitive_quality.iter_mut() {

if let Some(count) = primitive_counts.get(ptype) {

*total_quality /= *count as f64;

}

}

// Spatial quality map (simplified grid-based)

let grid_size = 16;

let mut spatial_quality_map = vec![vec![0.0; grid_size]; grid_size];

let mut spatial_counts = vec![vec![0; grid_size]; grid_size];

for run in runs {

let x_grid = ((run.center() / 100.0) as usize).min(grid_size - 1);

let y_grid = ((run.row as f64 / 10.0) as usize).min(grid_size - 1);

spatial_quality_map[y_grid][x_grid] += run.confidence;

spatial_counts[y_grid][x_grid] += 1;

}

// Normalize spatial quality map

for i in 0..grid_size {

for j in 0..grid_size {

if spatial_counts[i][j] > 0 {

spatial_quality_map[i][j] /= spatial_counts[i][j] as f64;

}

}

}

DetailedQualityMetrics {

scanline_quality,

primitive_quality,

spatial_quality_map,

temporal_consistency: None, // Not implemented for static images

}

}

}

impl Default for QualityParams {

fn default() -> Self {

Self {

geometric_weight: 0.4,

spatial_weight: 0.3,

prediction_weight: 0.3,

min_confidence: 0.1,

}

}

}
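The overall quality score in `assess_quality` is a weighted sum of the geometric, spatial, and prediction components. With the default weights (0.4, 0.3, 0.3) the weights sum to 1, so the result stays in [0.0, 1.0] as long as each component does. The sketch below shows the same combination with one added assumption that is not in the listing above: it divides by the weight total so that custom weights cannot push the score out of range. `Weights` and `overall_quality` are hypothetical names that mirror `QualityParams`.

```rust
/// Hypothetical mirror of the three weights in `QualityParams`.
struct Weights {
    geometric: f64,
    spatial: f64,
    prediction: f64,
}

/// Weighted combination of the three component scores.
/// Assumption (not in the original listing): the sum is normalized by the
/// weight total, and a non-positive total yields 0.0.
fn overall_quality(w: &Weights, geometric: f64, spatial: f64, prediction: f64) -> f64 {
    let total = w.geometric + w.spatial + w.prediction;
    if total <= 0.0 {
        return 0.0;
    }
    let weighted = w.geometric * geometric
        + w.spatial * spatial
        + w.prediction * prediction;
    weighted / total
}
```

With the default weights the normalization is a no-op, so this sketch agrees with the unnormalized sum used in `assess_quality`.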

5. Performance Benchmarking Framework

// benches/compression_bench.rs

use criterion::{black_box, criterion_group, criterion_main, Criterion, BenchmarkId};

use cep_rle::{

encoder::{CepRleEncoder, PrecisionConfig, AnalysisConfig},

test_data::synthetic::SyntheticImageGenerator,

};

use nalgebra::DMatrix;

/// Benchmark compression performance across different image types and sizes

pub fn compression_benchmarks(c: &mut Criterion) {

let mut group = c.benchmark_group("compression");

// Test different image sizes

let sizes = vec![256, 512, 1024, 2048];

let image_types = vec!["lines", "curves", "mixed", "noise"];

for size in sizes {

for image_type in &image_types {

let image = generate_test_image(size, size, image_type);

group.bench_with_input(

BenchmarkId::new("ceprle_encode", format!("{}x{}_{}", size, size, image_type)),

&image,

|b, img| {

let mut encoder = CepRleEncoder::new(

size,

size,

PrecisionConfig::default(),

AnalysisConfig::default(),

);

b.iter(|| {

black_box(encoder.encode_image(black_box(img)))

});

},

);

}

}

group.finish();